The pragmatist's guide to AI-powered content operations

Written by Knut Melvær

Imagine this: Your content team spent three hours yesterday updating metadata across 50 product pages. They'll spend three hours tomorrow doing it again for a different category. By Friday, they'll have invested 15 hours on work that nobody will notice unless it's wrong.

And you might be thinking: "This seems like something we could automate. We need an AI strategy."

You're absolutely right!

But not in the way most companies approach it. You don't need a strategy workshop. You need to get practical.

Meanwhile, content teams feel the AI pressure. Some respond with professional resistance because they recognize it's hard to translate the coordination and context of their work into a chat box. Others have tried AI and found it underwhelming.

But as technologists, we know generative AI is powerful and capable. So how do you get your team to shift their perspective? How do you introduce AI without joining the majority of pilots that stall or fail?

This guide and the AI-readiness test help you assess how ready your content system is for AI, and show you how to run a 30-day pilot that delivers measurable business outcomes.

Do first. Strategize later.

It's easy to fall into the trap: The "we need to figure out AI" question turns into strategy workshops and endless meetings to align the organization. Meanwhile, nothing ships.

Here's the thing though:

The companies succeeding with AI don't start with "How do we AI?" They start with "What's blocking our team?" They pick one repetitive problem and eliminate it. Then they move on to the next one. They go deep and specific.

The difference is fundamental. It's the difference between "We need an AI strategy" and "We need to stop spending three hours on metadata every day." Between transformation theater and actual improvement. Between expensive pilots that stall and tactical wins that compound.

Working with AI is a skill. It's an adventure. You have to get your feet wet and you have to get the repetitions in. But having a plan helps. And solving a concrete problem is how you build that invaluable experience and establish trust in the technology.

When travel-tech startup loveholidays scaled from managing 2,000 hotel descriptions to 60,000, their content team didn't grow. Their content operations did. Mike Jones, their CTO, shared this story at the Everything *[NYC] conference. They automated the repetitive work, and conversion went up because quality improved, especially on the long tail of less popular offerings.

“All 60,000 of those hotels are now managed by that same content team.” —Mike Jones, CTO of loveholidays

They didn't start with an AI strategy. The team at loveholidays identified an expensive operational chore, writing and maintaining tens of thousands of hotel descriptions, and eliminated it systematically. The content strategy stayed the same throughout. The operations got systematically better.

This distinction between content strategy and content operations matters because most AI initiatives fail at this boundary. Content strategy is what you decide to create and why: which topics to cover, what messages to communicate, how to position your brand. It requires business judgment, market understanding, and creative thinking.

Content operations is how you execute that strategy, which includes work like:

- tagging and categorizing content

- linking and cross-referencing

- validating against guidelines

- translating and localizing

- optimizing for search and accessibility

- managing workflows and approvals

- publishing and distributing
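Most of the tasks above are rules applied at volume, which is what makes them good automation candidates. As a minimal sketch of "validating against guidelines", assuming pages are plain dicts with hypothetical `id`, `seo_description`, and `tags` fields, and using illustrative thresholds rather than any standard, a batch check can surface exactly which pages need attention:

```python
from dataclasses import dataclass

# Hypothetical guideline values for illustration; substitute your own style guide.
MIN_DESCRIPTION = 50
MAX_DESCRIPTION = 160
MAX_TAGS = 5


@dataclass
class Issue:
    page_id: str
    message: str


def check_page(page: dict) -> list[Issue]:
    """Apply simple metadata guidelines to one page (fields are hypothetical)."""
    issues = []
    page_id = page.get("id", "<no id>")

    # Treat a missing description as an empty one so it fails the length rule.
    description = page.get("seo_description") or ""
    if not MIN_DESCRIPTION <= len(description) <= MAX_DESCRIPTION:
        issues.append(Issue(
            page_id,
            f"seo_description is {len(description)} chars; "
            f"expected {MIN_DESCRIPTION}-{MAX_DESCRIPTION}",
        ))

    tags = page.get("tags") or []
    if not tags:
        issues.append(Issue(page_id, "no tags"))
    elif len(tags) > MAX_TAGS:
        issues.append(Issue(page_id, f"{len(tags)} tags; expected at most {MAX_TAGS}"))

    return issues


def audit(pages: list[dict]) -> list[Issue]:
    """Run the checks across a whole batch, such as every page in one category."""
    return [issue for page in pages for issue in check_page(page)]
```

A check like this only finds the problems. Drafting the missing descriptions or tags for the flagged pages is the step where an AI model could plausibly take over, with a person reviewing the output.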

Within these tasks, you'll find that every content team has a backlog of operational work that blocks strategic execution:

"We can't launch in new markets fast enough because translation is bottlenecked."

"Our style guide compliance is inconsistent because manual checking is impossible at scale."

"We're spending more time formatting and distributing than actually creating."

These aren't content creation problems. They're operations problems. And this is where you are going to start looking for opportunities to get practical with AI.

Starting with "What's blocking our team?" changes everything. You're not chasing AI adoption metrics. You're solving specific operational problems that happen to be solvable with AI. And the results should be measured in business outcomes.

Does your CMS architecture support AI?

Here's what we see: your CMS architecture determines AI success more than the AI itself. The most sophisticated AI hitting a legacy content architecture is like putting a Ferrari engine in a horse carriage. Sure, it has power. But it's still fundamentally the wrong vehicle.

Before you can introduce AI-powered content operations, you need to understand to what degree your content infrastructure supports it.

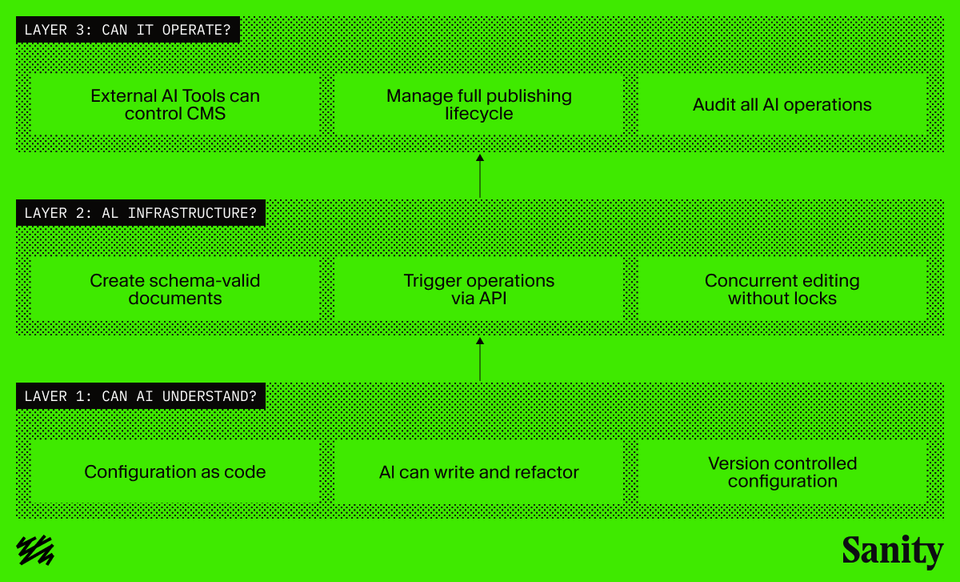

The assessment explores three layers of AI-powered content operations, each building on the previous one.

Layer 1: Can AI understand your CMS?

This is about whether AI can even comprehend how your content is structured. Try this test: Ask Cursor or Claude Code to help you create a content model for a blog with authors, categories, and SEO metadata.

For UI-based CMSes, you get instructions like: "Navigate to Content Model section, click 'Create New Content Type', add field 'title', select type 'Short text'..." AI can write these instructions, but it can't execute them. It can't test them. It can't version control them. When something breaks, AI can't see what's wrong because the configuration lives in a database somewhere, not in code.

When configuration is code, AI can generate and update complete working schemas. It can suggest validation rules based on requirements. It can refactor schemas when requirements change. It can spot issues. You can ask "why isn't this working?" and paste actual code. It can use schema code as context to generate queries, frontend components, and migration scripts.
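To make "configuration as code" concrete, here is a minimal sketch of the blog content model from the Layer 1 test (authors, categories, SEO metadata) expressed as code. The `Field` and `DocumentType` classes, the `_type` key on documents, and the length limits are all hypothetical and CMS-agnostic; this is not any real CMS's schema API. The point is that the model and its validation rules are plain text an AI assistant can read, edit, and reason about.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical, CMS-agnostic building blocks; not any real CMS's schema API.
@dataclass(frozen=True)
class Field:
    name: str
    type: str                        # e.g. "string", "text", "reference", "array"
    required: bool = False
    max_length: Optional[int] = None
    to: Optional[str] = None         # target type name for references (not checked below)


@dataclass(frozen=True)
class DocumentType:
    name: str
    fields: tuple[Field, ...]


AUTHOR = DocumentType("author", (
    Field("name", "string", required=True),
    Field("bio", "text"),
))

CATEGORY = DocumentType("category", (
    Field("title", "string", required=True),
))

POST = DocumentType("post", (
    Field("title", "string", required=True, max_length=100),
    Field("author", "reference", required=True, to="author"),
    Field("categories", "array", to="category"),
    Field("seo_title", "string", max_length=60),
    Field("seo_description", "string", max_length=160),
))

SCHEMA = {t.name: t for t in (AUTHOR, CATEGORY, POST)}


def validate(doc: dict, schema: dict[str, DocumentType] = SCHEMA) -> list[str]:
    """Return human-readable problems for one document; an empty list means valid."""
    doc_type = schema.get(doc.get("_type"))
    if doc_type is None:
        return [f"unknown type: {doc.get('_type')!r}"]

    problems = []
    for f in doc_type.fields:
        value = doc.get(f.name)
        if value is None:
            if f.required:
                problems.append(f"{f.name} is required")
            continue
        if f.max_length is not None and isinstance(value, str) and len(value) > f.max_length:
            problems.append(f"{f.name} exceeds {f.max_length} characters")
    return problems
```

For example, `validate({"_type": "post", "title": "Hello"})` returns `['author is required']`. Because the model lives in a file rather than a database, it can be diffed, version-controlled, and pasted into an assistant as context when you ask "why isn't this working?"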
