Your content is now Live by default

Written by Simen Svale, Knut Melvær

Ever seen the "Error Establishing a Database Connection: Too Many Connections" message when your site is under heavy load, or played whack-a-mole with cache invalidation?

You deploy a critical content update, but you're never quite sure when it will actually hit the site. Maybe you have a mystical web of timeouts, webhooks, and revalidation tags, and you still end up refreshing the page multiple times before your changes go live.

This is why we've developed the Live Content API to make it easy to build live-by-default applications that scale to millions of visitors with just a few lines of code. Like three-ish.

The Live Content API is available in beta, and you can try it now in our Next.js starter, explore the documentation, or watch our recent Next.js Conf talk.

The perfect storm: When caching breaks down

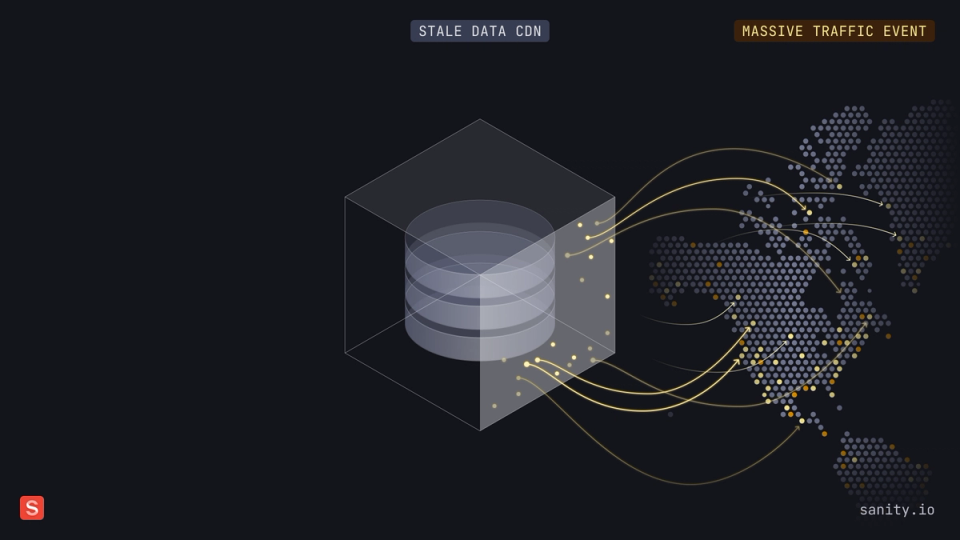

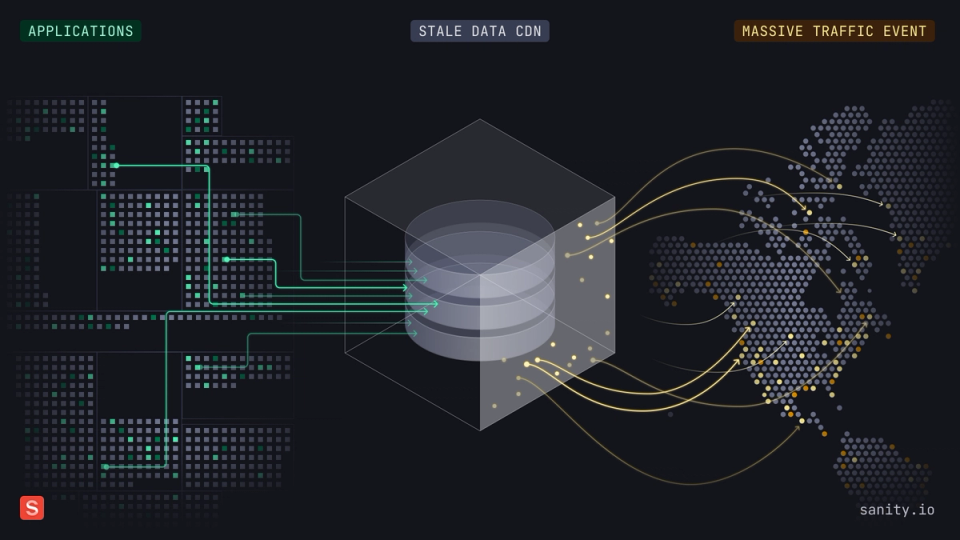

Imagine this scenario: You're running an e-commerce site, and a high-profile entrepreneur called Kim just dropped an Instagram post about your latest product. Suddenly, thousands of eager customers flood your site. This is your moment to shine – but it's also when traditional caching solutions start to crumble.

What are your choices?

You're caught in an impossible situation:

- If you keep serving from your CDN cache, visitors might see featured products that are already sold out and still try to buy them.

- If you invalidate your cache to ensure fresh content, you're effectively DDoSing your own infrastructure.

- Either way, you're likely pouring money into emergency infrastructure scaling or keeping your team up at night monitoring systems. No bueno.
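
The dilemma above can be made concrete with a small simulation. This is a minimal sketch under stated assumptions, not production code: `Origin`, `NaiveCdn`, `simulate`, and all the numbers are hypothetical, and the "CDN" is just an in-memory variable with no request coalescing.

```typescript
// Hypothetical origin server: tracks stock and counts how often it is hit.
class Origin {
  hits = 0;
  stock = 5;

  async getProductPage(): Promise<string> {
    this.hits++;
    // Simulate the latency of a database query plus rendering.
    await new Promise((resolve) => setTimeout(resolve, 10));
    return this.stock > 0 ? `In stock: ${this.stock}` : "Sold out";
  }
}

// Hypothetical edge cache: a single cached page, no request coalescing.
class NaiveCdn {
  private cached: string | null = null;
  private readonly origin: Origin;

  constructor(origin: Origin) {
    this.origin = origin;
  }

  async handleRequest(): Promise<string> {
    if (this.cached !== null) return this.cached;
    const page = await this.origin.getProductPage();
    this.cached = page;
    return page;
  }

  purge(): void {
    this.cached = null;
  }
}

async function simulate(visitors: number) {
  const origin = new Origin();
  const cdn = new NaiveCdn(origin);

  // Warm the cache, then the product sells out.
  await cdn.handleRequest();
  origin.stock = 0;

  // Choice 1: keep the cache. Cheap, but every visitor sees stale stock.
  const stale = await Promise.all(
    Array.from({ length: visitors }, () => cdn.handleRequest()),
  );
  const hitsWhileCached = origin.hits;

  // Choice 2: purge. Fresh, but every concurrent visitor misses at once.
  cdn.purge();
  const fresh = await Promise.all(
    Array.from({ length: visitors }, () => cdn.handleRequest()),
  );
  const hitsAfterPurge = origin.hits - hitsWhileCached;

  return { stalePage: stale[0], freshPage: fresh[0], hitsWhileCached, hitsAfterPurge };
}
```

Calling `simulate(1000)` in this model leaves the origin at a single hit while the cache keeps serving "In stock: 5", and then costs 1000 origin hits to show "Sold out" after the purge. Real CDNs can soften the second case by collapsing concurrent misses, but the trade-off has the same shape.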

To understand why these scenarios are so challenging to handle with traditional approaches, we need to take a step back and look at how content delivery has evolved on the web.

How content delivery actually works

The web has come a long way from its static beginnings, but many of our fundamental patterns for delivering content remain unchanged. Understanding these patterns helps explain why we're still struggling with the same caching problems decades later.

The classic request-response pattern

The request and response pattern is how we fetch content on the web. When you click a link or type a URL, your browser sends a request to the server hosting the website. The server responds with the requested content, which your browser renders. Simple enough!

But here's something to consider: you now have a cached version of that site in your browser. We've been trained to revalidate this cache by hitting the reload button – and as developers, we even use special hotkeys to make extra sure we're bypassing all cache layers. Hopefully, we'll get fresh content from the server. Right?

This pattern worked well for the early web, but as applications became more dynamic and interactive, we needed more sophisticated solutions.

How we got here

The web was built for a simple client/server model. Take your vanilla WordPress install: when a visitor requests a blog post, WordPress runs PHP code on the server for every visitor. The template code makes a database request, generates HTML by inserting content into the template, and returns this as a response.

This model works fine until your site gets popular. With many concurrent visitors, it becomes resource-intensive and can crash your server. The traditional solution? Add caching plugins. But suddenly, your users aren't guaranteed to get fresh content, even with aggressive cache busting.
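
To see why render-per-request falls over under load, here is a minimal sketch of the cycle described above. It is written in TypeScript rather than PHP, and `Database`, `renderPost`, `serve`, and the connection limit are hypothetical stand-ins, not WordPress internals.

```typescript
// Hypothetical database with a hard cap on simultaneous connections.
class Database {
  private open = 0;
  private readonly maxConnections: number;

  constructor(maxConnections: number) {
    this.maxConnections = maxConnections;
  }

  async query(slug: string): Promise<{ title: string; body: string }> {
    if (this.open >= this.maxConnections) {
      throw new Error("Too Many Connections");
    }
    this.open++;
    try {
      // Simulated query time; the connection stays open while we wait.
      await new Promise((resolve) => setTimeout(resolve, 10));
      return { title: `Post ${slug}`, body: "Hello, world" };
    } finally {
      this.open--;
    }
  }
}

// Every request runs the full query-then-template cycle; nothing is reused.
async function renderPost(db: Database, slug: string): Promise<string> {
  const post = await db.query(slug);
  return `<article><h1>${post.title}</h1><p>${post.body}</p></article>`;
}

// Fire a burst of simultaneous requests and count how many succeed.
async function serve(db: Database, concurrentVisitors: number) {
  const outcomes = await Promise.all(
    Array.from({ length: concurrentVisitors }, () =>
      renderPost(db, "hello").then(
        () => true,
        () => false,
      ),
    ),
  );
  const ok = outcomes.filter(Boolean).length;
  return { ok, failed: outcomes.length - ok };
}
```

With `new Database(5)`, a burst of 20 simultaneous visitors gets 5 rendered pages and 15 errors in this model, while 3 visitors are all served. A page cache removes most of those queries, which is exactly where the freshness problem starts.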

As these limitations became more apparent, the industry began moving toward more sophisticated architectures and frameworks.

Modern web caching

When we started to build decoupled frontends that consume API data, frameworks like Next.js introduced sophisticated caching mechanisms. For example, a Next.js app typically fetches data in a component and passes the result down to its child components.
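
A framework-free sketch of that pattern, assuming a time-based revalidation window. This is not actual Next.js code: `api`, `createCachedFetcher`, `PostPage`, `Title`, and `Body` are hypothetical names, the "components" are plain functions that return HTML strings, and the clock is injected so the behaviour is easy to follow.

```typescript
type Post = { title: string; body: string };

// Hypothetical stand-in for a content API; `calls` counts real fetches.
const api = {
  calls: 0,
  post: { title: "Launch day", body: "We are live." } as Post,
  fetchPost(): Post {
    this.calls++;
    return { ...this.post };
  },
};

// Time-based cache: reuse the last result until it is older than the window.
function createCachedFetcher<T>(
  fetcher: () => T,
  revalidateSeconds: number,
  now: () => number,
): () => T {
  let entry: { value: T; fetchedAt: number } | null = null;
  return () => {
    const cached = entry;
    if (cached !== null && now() - cached.fetchedAt < revalidateSeconds * 1000) {
      return cached.value;
    }
    const value = fetcher();
    entry = { value, fetchedAt: now() };
    return value;
  };
}

// "Child components": pure functions of the props they receive.
const Title = (props: { title: string }): string => `<h1>${props.title}</h1>`;
const Body = (props: { body: string }): string => `<p>${props.body}</p>`;

// "Page component": fetches once, then hands fields down to its children.
function PostPage(getPost: () => Post): string {
  const post = getPost();
  return `<article>${Title({ title: post.title })}${Body({ body: post.body })}</article>`;
}
```

If `getPost` is created with a 60-second window, an edit to `api.post.title` does not show up in `PostPage(getPost)` until the injected clock has advanced by 60 seconds, and `api.calls` stays at 1 in the meantime. That delay is the price of keeping the API out of the hot path.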
